
Running dockerized services via systemctl

I love running stuff in Docker, and one of the first things I always do on a freshly installed Linux machine is run a Postgres database and pgAdmin in Docker and configure them to start on boot. With a new laptop, and after doing some Docker preaching at my new workplace, I figured I might as well write about it. 😀

# Advantages

First of all, let’s talk about the practicalities of this approach. One advantage I’ve found with having my database set up like this is that creating a fresh database is as simple as changing the directory the database stores its files in. Need a different Postgres version? If you’re already running one through systemd, starting another one alongside it isn’t that easy. Well, this solves that issue too! Just expose it on a different port, give it a different directory to store its data in, and you’re golden.
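To make the "different port, different directory" idea a bit more concrete, here’s a rough shell sketch that writes a compose file for a second, independent Postgres stack. The helper name write_pg_stack, the version (16), the host port (5433) and the data directory (~/.pgdata-16) are illustrative assumptions, not part of the setup described below, and the demo writes into a temporary directory instead of /etc/docker/compose so it’s safe to try.

```shell
# Hypothetical helper: write a compose file for an extra Postgres stack.
# Usage: write_pg_stack DIR VERSION HOST_PORT DATA_DIR
write_pg_stack() {
  local dir="$1" version="$2" host_port="$3" data_dir="$4"
  mkdir -p "$dir"
  # Unquoted EOF so the variables below are expanded into the file.
  cat > "$dir/docker-compose.yml" <<EOF
services:
  postgres:
    image: postgres:${version}
    environment:
      - POSTGRES_PASSWORD=postgres
    volumes:
      # Its own data directory, so it never touches the first cluster.
      - ${data_dir}:/var/lib/postgresql/data
    ports:
      # A different host port; inside the container it is still 5432.
      - "${host_port}:5432"
EOF
}

# Demo: write into a throwaway directory instead of /etc/docker/compose.
demo_root="$(mktemp -d)"
write_pg_stack "$demo_root/pg16" 16 5433 "$HOME/.pgdata-16"
cat "$demo_root/pg16/docker-compose.yml"
```

Compose derives the default project name from the directory name, so a stack living in a pg16 directory won’t collide with the one in pg; running docker compose up there should give you Postgres 16 on localhost:5433 next to the original on 5432.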

I used a database as the example, but I do the same for nearly everything I’d otherwise run through systemd, such as Redis and pgAdmin. If I plan on running SFTP/SMTP servers in the future, you know I’m gonna look up how to do it through Docker. 🙂

# Docker compose file

Let’s create a directory for our setup first (use su instead of sudo as needed):

```shell
sudo mkdir -p /etc/docker/compose/pg
```

Note that the pg directory name stands for Postgres; your naming may differ. The rest of the post describes setting this up for Postgres and pgAdmin as well. Let’s define our docker-compose.yml:

```shell
sudoedit /etc/docker/compose/pg/docker-compose.yml
```

```yaml
services:
  postgres:
    image: postgres:latest
    environment:
      # You could define it like the secret below, but c'mon...
      - POSTGRES_PASSWORD=postgres
    volumes:
      # Feel free to use a different directory,
      # but you really should change the username :)
      - /home/marko/.pgdata:/var/lib/postgresql/data
    ports:
      - "5432:5432"

  pgadmin:
    image: dpage/pgadmin4:latest
    environment:
      # Define your email; watch out that there are no blanks after =
      - PGADMIN_DEFAULT_EMAIL=you@example.com
      # If you don't care about providing the password through a secret,
      # just set PGADMIN_DEFAULT_PASSWORD in plain text here instead
      - PGADMIN_DEFAULT_PASSWORD_FILE=/run/secrets/pg_admin_password
    ports:
      - "5050:80"
    volumes:
      - /home/marko/.pgadmin-data:/var/lib/pgadmin
    secrets:
      - pg_admin_password

# Consider the rest optional, for extra security
# if you want to use secrets
secrets:
  pg_admin_password:
    environment: PG_PASS
```
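The "no blanks after =" warning is easy to miss, since in the list form of environment a stray blank right after the = can end up as part of the value. Here’s a small grep-based sketch that flags such entries before you start the stack; check_env_blanks is a hypothetical helper, and it only looks at list-style "- KEY=value" lines, not the mapping form.

```shell
# Hypothetical helper: flag list-style environment entries such as
#   - KEY= value
# where a blank follows the "=". Prints offending lines (with line numbers)
# and returns non-zero if any were found.
check_env_blanks() {
  local file="$1"
  if grep -nE '^[[:space:]]*-[[:space:]]+[A-Za-z_][A-Za-z0-9_]*=[[:space:]]' "$file"; then
    echo "blank after '=' found in $file" >&2
    return 1
  fi
  return 0
}

# Demo on a throwaway file with one bad and one good entry.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
services:
  pgadmin:
    environment:
      - PGADMIN_DEFAULT_EMAIL= you@example.com
      - PGADMIN_DEFAULT_PASSWORD_FILE=/run/secrets/pg_admin_password
EOF
check_env_blanks "$sample" || echo "fix the entries above before docker compose up"
```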

# Postgres-specific configuration

This section is only relevant if you are setting up Postgres and pgAdmin as above. If you provided the pgAdmin password through a secret as I did, set the PG_PASS environment variable:

```shell
# fish
set -x PG_PASS your_password

# bash
export PG_PASS="your_password"
```
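Whichever shell you use, the variable has to be exported: docker compose runs as a child process of your shell and only inherits exported variables (that’s what -x does in fish and what export does in bash). The sketch below shows the difference, with a plain sh child process standing in for docker compose.

```shell
# A plain assignment creates a shell variable that child processes don't see.
unset PG_PASS
PG_PASS="your_password"
sh -c 'echo "child sees: ${PG_PASS:-<unset>}"'   # prints: child sees: <unset>

# Exporting puts it into the environment of every child, docker compose included.
export PG_PASS
sh -c 'echo "child sees: ${PG_PASS:-<unset>}"'   # prints: child sees: your_password
```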

If you want to learn more about setting secrets in Docker, you can read about it here.

Now start the stack from the compose directory, in the same shell, so that docker compose can see PG_PASS:

```shell
cd /etc/docker/compose/pg
docker compose up
```

And enjoy those errors. 😛

On a serious note, let’s look at what’s going on: pgAdmin can’t write to the directory we specified (which Docker created for us), because the pgadmin user is not its owner. So we have two options: make the pgadmin user the owner, or just give access to everyone. While we could just run chmod -R 777 ~/.pgadmin-data, let’s be a bit fancier.

Processes inside a container access your bind-mounted directories with the numeric user ID they run as; user names don’t carry over between the container and the host. We got the error for the pgadmin user, so to grant it ownership of the directory we need to find its user ID. This user won’t be present in our local /etc/passwd, but we can find it inside the container. So let’s do the following:

```shell
$ docker ps | grep pgadmin
77a4cc381ee6 dpage/pgadmin4:latest "/entrypoint.sh" About an hour ago Up About an hour 443/tcp, 0.0.0.0:5050->80/tcp, [::]:5050->80/tcp pg-pgadmin-1
```

Now, exec into the container:

```shell
docker exec -it 77a4cc381ee6 sh
```

```shell
$ cat /etc/passwd | grep pgadmin
pgadmin:x:5050:0::/home/pgadmin:/sbin/nologin
```
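If you’d rather not eyeball the passwd line, the UID is the third colon-separated field. A small awk sketch that pulls it out (uid_of is a hypothetical helper name):

```shell
# Hypothetical helper: print the UID of user $1 from passwd-format input on stdin.
# Field layout: name:password:UID:GID:comment:home:shell
uid_of() {
  awk -F: -v user="$1" '$1 == user { print $3 }'
}

# The line we found inside the pgadmin container:
echo 'pgadmin:x:5050:0::/home/pgadmin:/sbin/nologin' | uid_of pgadmin   # prints 5050
```

Piping the container’s passwd file into it from the host, e.g. docker exec <container-id> cat /etc/passwd | uid_of pgadmin, should give the same result without opening a shell in the container.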

And there we go! Our user ID is 5050, so go ahead and exit the container and make that user the owner of our directory:

```shell
sudo chown -R 5050 ~/.pgadmin-data
```
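To double-check that the chown worked, compare the directory’s numeric owner with the UID from the container. A minimal sketch, assuming GNU coreutils stat (its -c '%u' prints the owner’s UID; BSD/macOS stat uses -f '%u' instead); owned_by is a made-up helper name.

```shell
# Hypothetical helper: succeed if DIR is owned by numeric UID, otherwise explain and fail.
owned_by() {
  local dir="$1" want="$2" have
  have="$(stat -c '%u' "$dir")" || return 1
  if [ "$have" = "$want" ]; then
    echo "$dir is owned by UID $have"
  else
    echo "$dir is owned by UID $have, expected $want" >&2
    return 1
  fi
}

# On the real setup you would run: owned_by ~/.pgadmin-data 5050
# Demo on a fresh temp directory, which belongs to whoever runs this:
demo_dir="$(mktemp -d)"
owned_by "$demo_dir" "$(id -u)"
```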

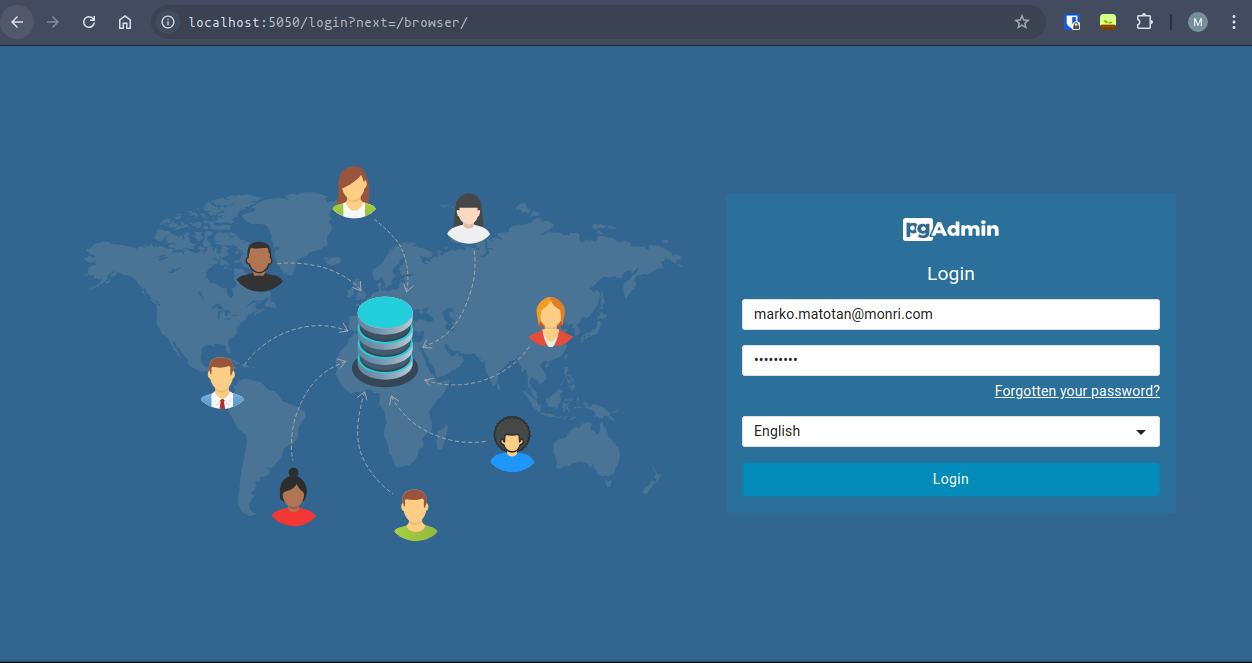

Once the ownership is fixed, the errors should go away (if the pgadmin container has already exited, just run docker compose up again), and we can try logging in. We exposed the pgAdmin interface at localhost:5050 (nothing to do with the user ID 5050), and we should be able to log in there with our email/password combination:

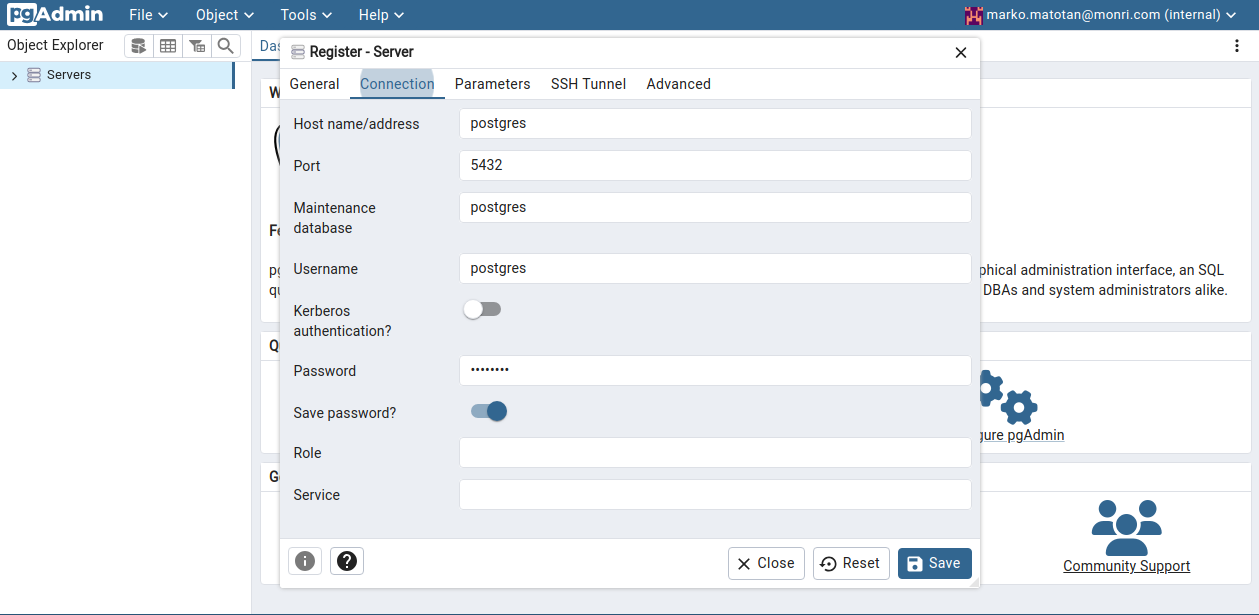

Now, this part isn’t really on topic, but to connect to the Postgres database, right-click on Servers and choose Register > Server. Name it localhost and, if you followed the setup, fill in the Connection tab with host name postgres, port 5432 and username postgres (the default user of the Postgres image), with postgres as the password if you copied the docker-compose.yml above.

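Using postgres as the host name works because of the way Docker resolves addresses within the same stack: Compose attaches all services of a stack to a shared network, and Docker’s internal DNS resolves each service name to its container. (localhost, on the other hand, would point at the pgAdmin container itself.) You can read more about it here.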

# Queueing them up in systemctl

Ah, and for the finale: I really don’t want to be bothered running docker compose up every time I boot into Linux, so the following steps make sure my database starts at boot through systemd and is shut down cleanly on shutdown.

```shell
sudoedit /etc/systemd/system/docker-compose@.service
```

```ini
[Unit]
Description=%i service with docker compose
PartOf=docker.service
After=docker.service

[Service]
Type=oneshot
RemainAfterExit=true
WorkingDirectory=/etc/docker/compose/%i
ExecStart=docker compose up -d --remove-orphans
ExecStop=docker compose down

[Install]
WantedBy=multi-user.target
```
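For a template unit, systemd substitutes the part of the unit name between the @ and .service for %i, so starting an instance named pg runs the unit with WorkingDirectory=/etc/docker/compose/pg. The sketch below mirrors that mapping as a pre-flight check you could run before systemctl start. The function names, the COMPOSE_ROOT override and the example@ prefix are hypothetical, and the mapping assumes plain instance names without characters systemd would escape.

```shell
# Root directory that %i is appended to in the unit's WorkingDirectory.
COMPOSE_ROOT="${COMPOSE_ROOT:-/etc/docker/compose}"

# Hypothetical helper: map "prefix@instance.service" to the directory %i points at.
compose_dir_for() {
  local unit="$1" instance
  instance="${unit#*@}"            # drop everything up to and including "@"
  instance="${instance%.service}"  # drop the ".service" suffix, if present
  printf '%s/%s\n' "$COMPOSE_ROOT" "$instance"
}

# Hypothetical pre-flight check: is there a compose file where the unit will look?
# (Compose also accepts names like compose.yaml; this checks the one used in this post.)
preflight() {
  local dir
  dir="$(compose_dir_for "$1")"
  if [ -f "$dir/docker-compose.yml" ]; then
    echo "ok: $dir/docker-compose.yml"
  else
    echo "missing: $dir/docker-compose.yml" >&2
    return 1
  fi
}

# With the default COMPOSE_ROOT this prints /etc/docker/compose/pg
compose_dir_for "example@pg.service"
```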

And now for my favorite part: make sure the stack we started earlier is down (stop it with Ctrl+C, or run docker compose down in /etc/docker/compose/pg), then start it through systemctl:

```shell
sudo systemctl start docker-compose@pg.service
```

Once everything looks good, we can enable it so it starts at boot:

```shell
sudo systemctl enable docker-compose@pg.service
```

Once again, keep in mind that you can do this with any other docker compose setup; just make sure to adjust the directory and file names and use the right commands to run them!